AI is radically changing the practice of law, but human interaction is crucial, experts say

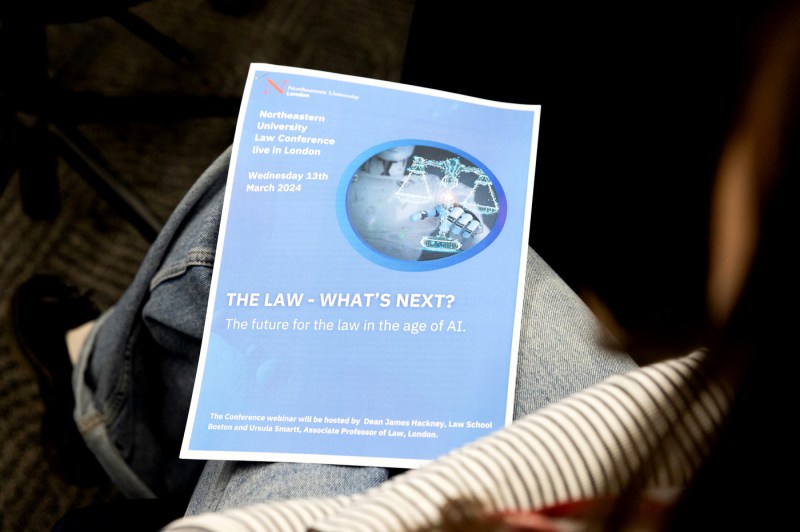

“The Law — What’s next? The future for law in the age of AI” was led by Ursula Smartt, an associate professor of law at Northeastern’s London campus.

LONDON — A high-profile example of the risks of using artificial intelligence made headlines last year when Steven Schwartz, a personal injury lawyer in New York, admitted to using ChatGPT to prepare a court filing.

Unfortunately for him, the filing included made-up case details and quotes, and became a widespread conversation starter about the ways AI can be misused in the legal profession.

The Schwartz case was mentioned several times during a recent Northeastern University conference, “The Law — What’s next? The future for law in the age of AI.”

Ursula Smartt, an associate professor of law at Northeastern’s London campus, said generative AI is already radically changing the practice of law.

For example, legal AI companies such as Harvey or Kira have software that can draft skeleton arguments for court cases and provide time-saving provisions in contract law, Smartt said.

“The next cohort of our law graduates entering the workplace will no doubt find AI chatbots in place, which means … we should incorporate such training into our syllabuses,” she said.

Smartt struck an optimistic tone about the power of AI. It might “take the tedium out of lawyering,” she said.

At the same time, Smartt said she’s “already witnessed that ChatGPT can present pitfalls,” referring to the Schwartz case.

But the use of AI wasn’t the issue, she said. The problem was that Schwartz didn’t check the information it provided.

“Blaming AI for legal errors is as nonsensical as blaming it for scratching my Mini Cooper car as I sit back reading a newspaper having asked the self-park tool to park it in a narrow space,” she said. “The fault lies with the lawyer who fails to check the motion before filing it.”

“The Law — What’s next? The future for law in the age of AI” was held at the Devon House and streamed live to Northeastern’s 13 global campuses. It brought together senior lawyers, barristers and judges, as well as law graduates working in the field.

Shona Coffer, one of the youngest partners at the global magic circle law firm Mishcon de Reya LLP, kicked off a session on data, ethics and trust by explaining ways AI is saving time in her casework. Her message was especially relevant to law students.

“Law is great,” she said. “And it’s going to be even better for you because you are not going to suffer years and years of utter dross that we had, page-turning of documents and disclosure, which can now be done rapidly thanks to generative AI.”

Coffer said she is less impressed by ChatGPT, particularly when it comes to drafting documents for litigation. You can’t tell a robot to draft specifically for a High Court judge in London, she said. In fact, her firm, Mishcon, has recently developed its own “chat” AI software, which pays particular attention to the data protection and GDPR laws regarding its clients.

“The Schwartz case wasn’t ChatGPT’s fault. It was user error,” Coffer said. “But I do worry about the quality of drafting that is coming up through the legal system. Because if you haven’t read the materials and it’s AI-generated, all you’re doing is moving it around. So you’re not learning.”

Eva Bernardo Haro-Harris, a Northeastern law graduate and former student of Smartt’s, is a trainee solicitor at Cantourage. She spoke about the benefits and challenges of being part of Generation Z, the first generation to grow up entirely with the internet, and how her interests in YouTube and coding helped her legal career.

“[People] expect you to know about these technologies when you’re younger,” she said. “They want to know how they can use the magical power of TikTok and algorithms to make a company more profitable, but in a legal way. And it’s true, we do have this understanding.”

Gill Phillips, The Guardian newspaper’s director of editorial legal services, advised conference attendees on the reporting of stories related to WikiLeaks, Edward Snowden, the Trafigura superinjunction case and the Cambridge Analytica scandal.

She described herself as “Generation Luddite” and explained just how far technology has evolved since she began her career in the 1970s.

“When I started in private practice, we had a mainframe computer that was about the size of this room,” she said. “I spent a lot of my time sitting on the sofa, reading one end of a lease to an article clerk.”

Jeff Blackett, a recently retired judge advocate general for the British military courts martial, enlightened the audience about media attacks on the U.K. judiciary and how this might threaten democracy. He explained the threats he received as he presided over the complex case of “Marine A” Sergeant Blackman, who shot and killed an Afghan insurgent in Helmand province.

Khanjana Gola and Viren Thandi, Northeastern law graduates and former students of Smartt’s, talked about the legal risks of relying on a hypothetical “AI lawyer” and the potential ramifications of the rise of deepfake technology.

James Hackney, dean of the Northeastern School of Law in Boston, told the audience that “generative AI promises to be both disruptive and additive to education around the legal profession.”

Naomi Goulder, deputy dean of Northeastern’s London campus, said the positives of AI far outweigh the negatives.

“Whatever we may think about AI in society, and there’s definitely some skepticism, there seems to be an uplifting message for people going into the law today … that you can use it as a tool that will allow you to spend time on more interesting work,” she said.