Professor designs algorithms to make robots better collaborators

Fully autonomous robots, such as drones and self-driving cars, are no longer a thing of the future. But hurdles remain before they completely integrate into our daily lives, delivering packages to our doorsteps, helping first responders, and assisting the elderly.

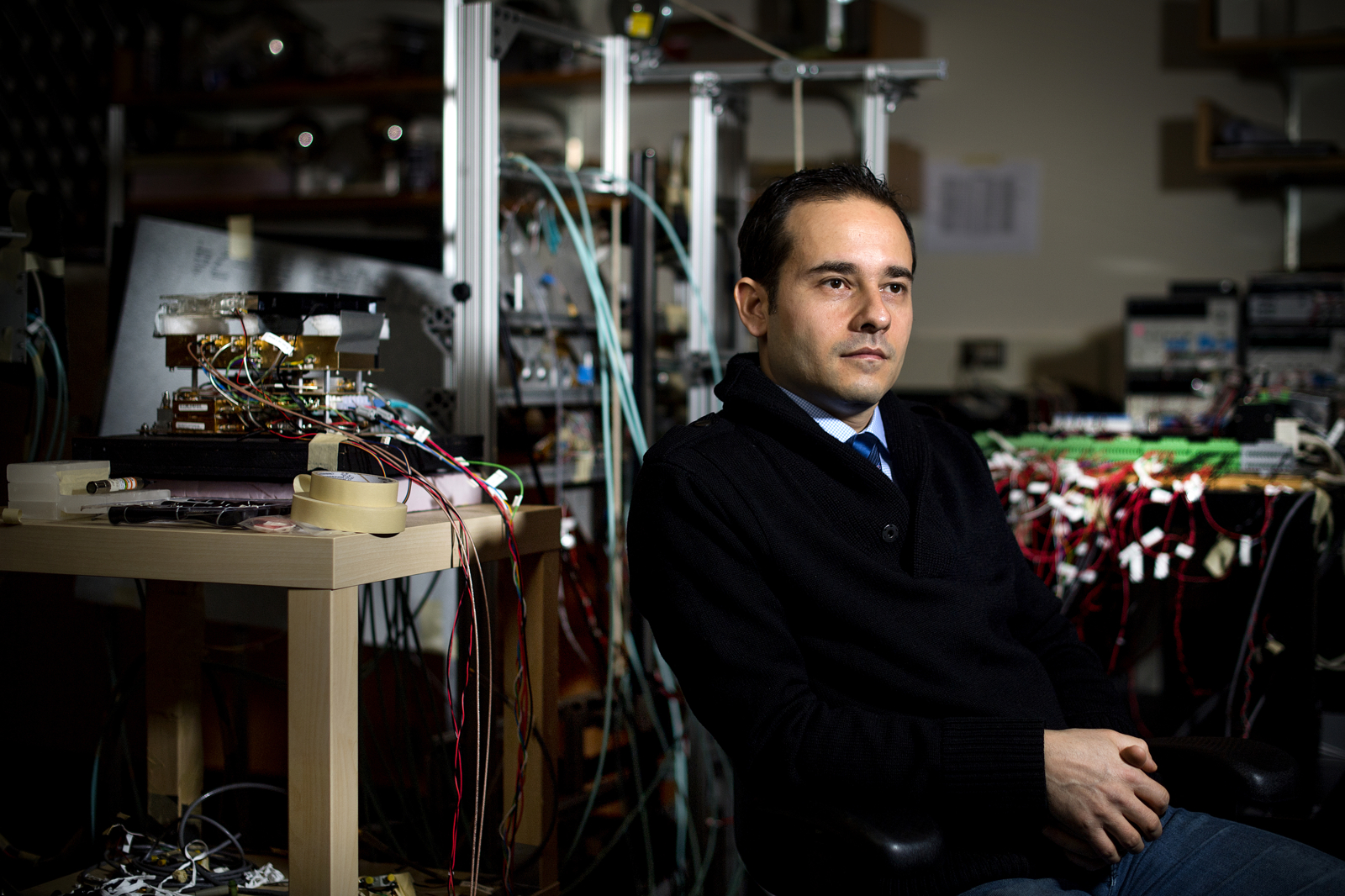

The potential of a group of autonomous agents to perform complex tasks in a well-coordinated manner is the focus of research by Rifat Sipahi, associate professor of mechanical and industrial engineering at Northeastern. He designs algorithms that enable groups of robots to make good decisions despite inherent delays in information sharing.

Such delays, which range from minutes down to fractions of a second, can be detrimental to group behavior, especially when the robots can access and act only on “outdated” information. But Sipahi is working to overcome that challenge. As he framed the problem: “How do we design a sophisticated protocol that can tell robots in which direction to move to perform a collaborative task when the only information they have is outdated data about their peers and themselves?”

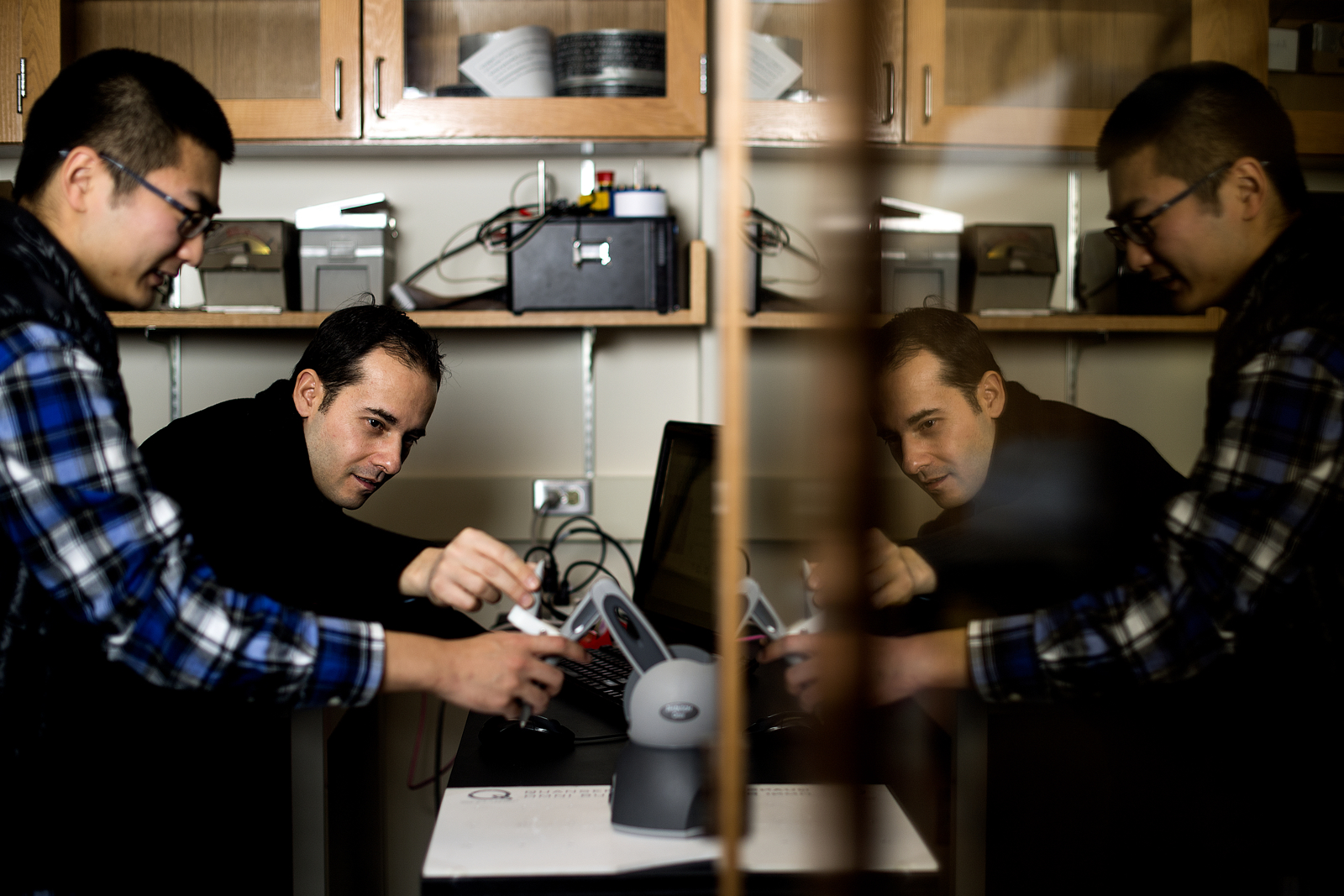

In 2015, Sipahi and his research partner Wei Qiao, PhD’13, made some headway, designing an algorithm capable of both improving and speeding up cooperation among a trio of robots with information-sharing delays. The research, supported by a National Science Foundation grant and presented in a paper published in IEEE Transactions on Control Systems Technology, could inform future solutions for minimizing the harm that communication delays cause in tasks performed by collaborative robots.

“Cooperation of these robotic systems is critical,” Sipahi explained. “What we showed is that these robots, each with no immediate information about their peers’ present locations, could still coordinate their motions and convene at a location of their choosing in a totally autonomous way. We can even modulate within the algorithm their speed of achieving the rendezvous mission.”
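To make the rendezvous idea concrete, here is a minimal sketch of a textbook delayed-consensus rule, not the algorithm from Sipahi and Qiao's paper. It assumes three robots on a plane, a single uniform delay, and a rule in which each robot steers toward its peers using only stale position data, including a stale reading of its own position. The function name, the gain, and the delay values are all illustrative assumptions.

```python
from collections import deque


def simulate_rendezvous(positions, gain=0.5, delay_steps=20, dt=0.01, steps=3000):
    """Euler simulation of a simple delayed-consensus rule (illustrative only).

    Each robot's velocity is `gain` times the sum of (peer - self) offsets,
    computed from a snapshot that is `delay_steps` time steps old.
    """
    n = len(positions)
    current = [tuple(p) for p in positions]
    # Assumption: before the start, every robot sat at its initial position.
    history = deque([current] * (delay_steps + 1), maxlen=delay_steps + 1)

    for _ in range(steps):
        stale = history[0]  # the outdated snapshot all robots act on
        updated = []
        for i in range(n):
            vx = gain * sum(stale[j][0] - stale[i][0] for j in range(n) if j != i)
            vy = gain * sum(stale[j][1] - stale[i][1] for j in range(n) if j != i)
            updated.append((current[i][0] + dt * vx, current[i][1] + dt * vy))
        current = updated
        history.append(current)  # the oldest snapshot drops off automatically
    return current
```

With these assumed settings, calling `simulate_rendezvous([(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)])` brings the three robots together at the centroid of their starting points. The `gain` parameter plays the role of the speed knob mentioned in the quote: raising it speeds up the meeting, but in this simple model a gain that is too large for the delay makes the robots oscillate instead of converging.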

On the road

Sipahi is now working to understand how human drivers navigate highways in the presence of autonomous vehicles, the first step in a decade-long project to improve traffic flow. Using control theory and human factors engineering, he ultimately wants to design algorithms that would enable self-driving cars to collaborate with human drivers and each other, which would help reduce traffic jams across all modes of roadway transportation.

Sipahi is collaborating with mechanical and industrial engineering associate professor Yingzi Lin, a human factors engineering expert whose lab includes a driving simulator that they hope to put to the test this spring. “We’ll have some time to work up some concepts and bring them to the attention of car manufacturers” before self-driving cars become a ubiquitous presence on the road, Sipahi said. “If we want to achieve better driving conditions for everybody,” he added, “self-driving cars will need to work together and with the imperfections of human drivers.”

In the air

Sipahi is also focused on improving human-robot interaction, a topic he first explored through a 2011 Young Faculty Award from the Defense Advanced Research Projects Agency. Now it’s a major thrust of a new research collaboration that aligns with the federal government’s National Robotics Initiative. His motivation is simple, he explained, saying that “humans will remain a centerpiece of handling complex tasks together with AI and robotics systems.”

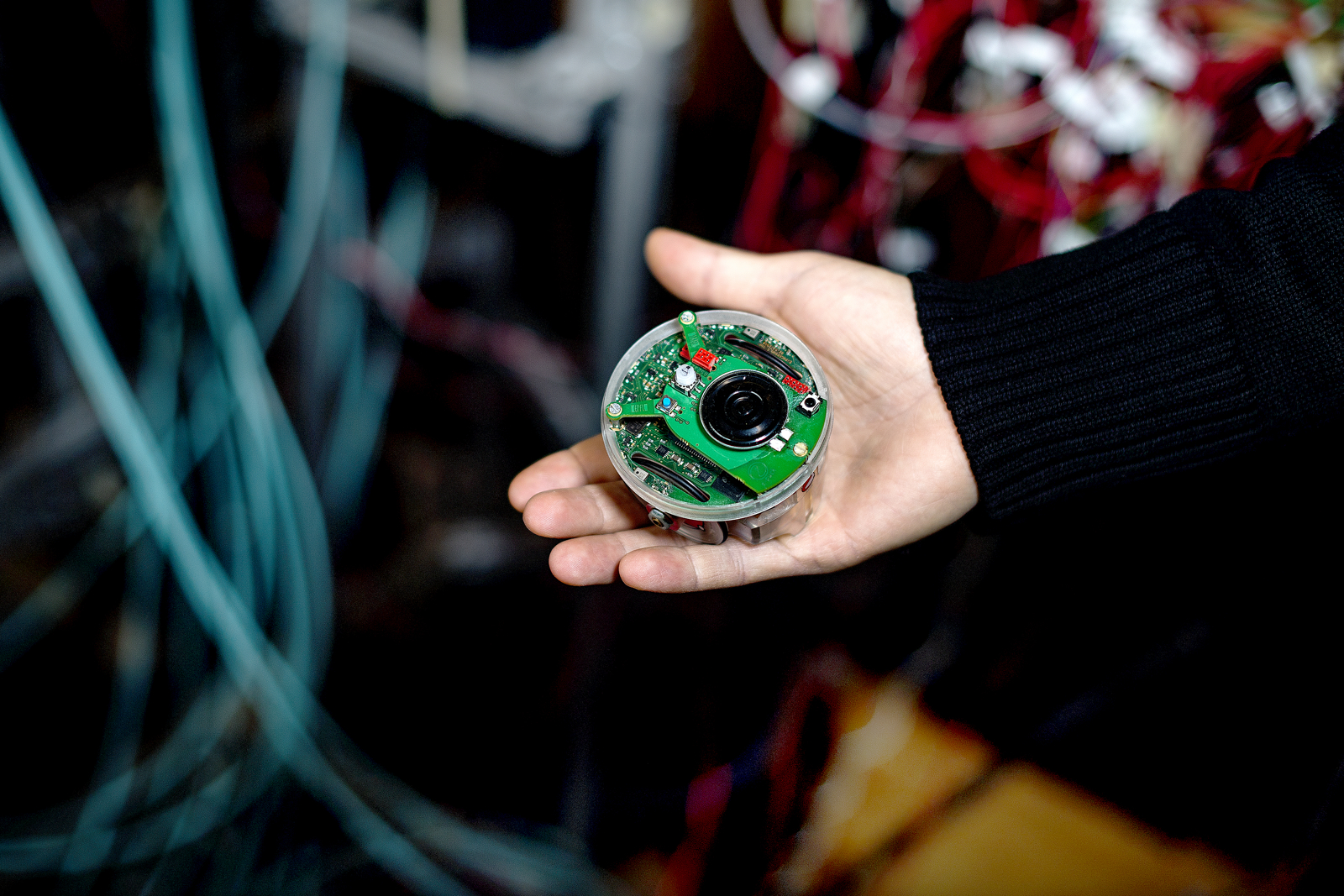

In particular, Sipahi is working with his colleagues at the University of South Florida and Bilkent University in Turkey to design an algorithm that would make it easier for a human in Location A to control a robot in Location B. Imagine that a human operator in Boston is using a joystick to control a robotic system in Turkey, whose movements are displayed via webcam. There’s a lag between the moment the operator moves the joystick in Boston and the moment the webcam shows how that action has affected the robot in Turkey. This makes it extremely difficult, if not impossible, for the operator to course-correct if the joystick movement was too aggressive and caused the robot to stray off its path. The results could be disastrous, especially if the robot were undertaking a crucial mission in the real world.

To solve this dilemma, Sipahi and his research partners have begun designing a so-called adaptive control box that would modulate the signal sent from the joystick to the robot. If the human operator were too aggressive, the adaptive control box, powered by a complex algorithm, would moderate the movement. “When the human moves the joystick, the command won’t go to the robot directly,” Sipahi explained, noting that he and his colleagues will begin conducting experiments with human subjects this spring. “There will be an algorithm that looks at how he moved the joystick, and that algorithm would transfer to the robot an appropriate command to meet the human demands placed upon the robot.”
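The article does not describe how the adaptive control box works internally, so the sketch below swaps in a much simpler stand-in: a plain rate limiter that sits between the joystick and the robot and caps how fast the forwarded command may change. The class name `CommandShaper` and the parameters `max_rate` and `dt` are hypothetical, chosen only to illustrate the idea of moderating an overly aggressive input.

```python
class CommandShaper:
    """Rate limiter between joystick and robot (illustrative stand-in only)."""

    def __init__(self, max_rate, dt):
        # Largest change in the forwarded command allowed per update.
        self.max_step = max_rate * dt
        self.output = 0.0

    def update(self, joystick):
        """Move the forwarded command toward the joystick value, but no
        faster than the configured rate allows."""
        change = joystick - self.output
        change = max(-self.max_step, min(self.max_step, change))
        self.output += change
        return self.output
```

With `CommandShaper(max_rate=1.0, dt=0.1)`, a sudden full-deflection input of 1.0 is forwarded as a gradual ramp of about 0.1 per update, while small, gentle adjustments pass through unchanged. A truly adaptive design would presumably tune that limit from the operator's behavior and the measured lag, which this sketch does not attempt.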

Sipahi underscored the value of uncovering how the human mind works and then harvesting human intelligence to allow robots to perform tasks that people can’t complete. “Humans and robots will need to work in a synergistic manner,” he said. “The opportunity will be to optimize this synergy by leveraging human intelligence and robots’ agility against trade-offs such as humans’ imperfections.”