Social robots see smell

“The thing that’s been missing in robotics is a sense of smell,” said biology professor Joseph Ayers.

For more than four decades, he has been working to develop robots that do not rely on algorithms or external controllers. Instead, they incorporate electronic nervous systems that take in sensory inputs from the environment and spit out autonomous behaviors. For example, his team’s robo-lobsters are designed to seek out underwater mines without following a predetermined course.

“Now people want robots to do group behavior,” said Ayers, noting that social insect colonies are the perfect model. “If you’re doing large field explorations for mines, you want to have 20 or 30 robots out there.” In order to get robots to cooperate with each other, he needs them to act like ants or bees or termites.

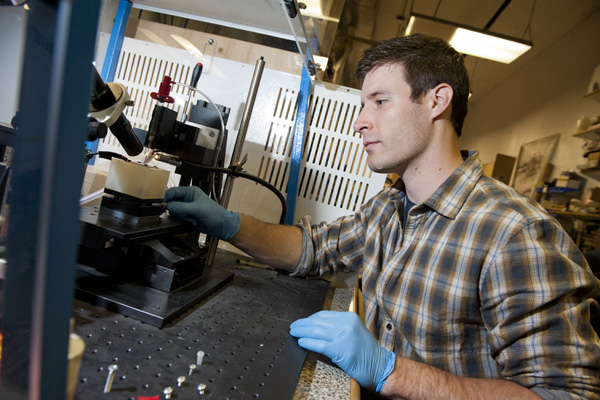

Professor Joseph Ayers is developing robots that can sense their environment, move autonomously, and interact with one another. Photo by Brooks Canaday.

Bees waggle their behinds to communicate. Ants use almost two dozen scent glands, depositing a trail of “stinks” as they go about their business. It’s this behavior that Ayers wants to mimic in his next generation of biomimetic robots.

To do so, he needs electronic devices that can sense chemical inputs, such as explosives. His idea is to integrate various microelectronic sensors that can interface with living cells. For example, when a bacterial cell programmed to bind odorants in the environment does so, the binding may elicit a conformational change; that change may translate to an influx of calcium ions, which is detected by a second cell programmed to generate light when it binds calcium. In this way, Ayers said, “you can see smell.”

That output would then trigger microelectronic actuators that tell the robot to perform a particular action, such as moving toward or away from the stimulus.

But in order for any of this to play out, somebody needs to build these futuristic devices.

Enter bioengineering graduate student Ryan Myers, who built one of the world’s few e-jet printers for Ayers’ lab. He learned the nearly artisanal craft from Andrew Alleyne, a professor of engineering at the University of Illinois who perfected the technology. Myers’ work earned him the interdisciplinary research award at the RISE:2013 Research, Innovation, Scholarship, and Entrepreneurship Expo earlier this year.

According to Ayers, “inkjet printing is the industry standard for organic electronics.” This state-of-the-art technology is already paving the way for a new industry of inexpensive, versatile electronics, such as the curved television that debuted earlier this month.

The problem, at least for Ayers’ lab, is that inkjet printers can only deposit droplets 30 microns or larger. While that might seem sufficiently teeny to the rest of us, it’s not small enough for Ayers, who needs electronic features that are smaller than a living cell.

That’s where the “e,” for electrohydrodynamic, comes into the picture. In the case of “traditional” inkjets, a droplet is deposited onto a surface through backpressure alone. This means that some of the ink spreads out when it lands. E-jet printers apply a voltage between the printer head and the surface, as well as a small vacuum force on the other side. When the ink drops from the printer head, it is both pushed and pulled to the exact spot for which it’s intended. The technology allows Ayers’ team to print droplets as small as 250 nanometers.

With droplets a fraction of the diameter of a living cell, “we can print many features per cell instead of many cells per feature,” said Ayers. That is, the team can now produce microelectronics with high enough resolution to integrate with biological systems.

The research team is now hard at work printing biocompatible photodiodes, nitric oxide sensors, and photosensors to integrate into their robo-lobster and robo-lamprey projects. It’s just the next step in Ayers’ goal to create a “social-robot.”