RoboBees get smart in pollen pursuit

When a scout honeybee returns to the hive, she performs a “waggle dance,” looping and shaking her rear end in particular patterns to direct her comrades toward the jackpot of nectar and pollen she’s found. Her movements communicate the direction and distance of the nectar source, providing a vector along which the other bees can now travel. As they fly through the air, the flow of optical stimuli across their peripheral vision tells the bees how far they’ve traveled and when to turn.

The whole operation is the nuanced output of the bees’ neural circuitry, the product of eons of evolutionary optimization. Now that the honeybee population is in steep, inexplicable decline, the loss of its specialized pollination practices threatens crop viability across the globe.

But if honeybees disappear completely, a team of scientists at Northeastern, Harvard University, and CentEye, Inc. has a plan. Using Harvard’s groundbreaking pop-up manufacturing technique, the team can rapidly generate inexpensive swarms of miniature flying robots, which could someday pollinate an entire field of crops.

“But, a swarm of micro-robots could be used for a lot of different things,” said Dan Blustein, a graduate student at Northeastern’s Marine Science Center. They could be used for traffic and weather monitoring or to safely investigate a leak at a radiation plant, for instance.

“A lot of the technology of this stuff, if you can shrink it down that small, get the power requirements that low,” said post-doctoral researcher Anthony Westphal, “it really does open up a lot of windows in terms of what can be useful.”

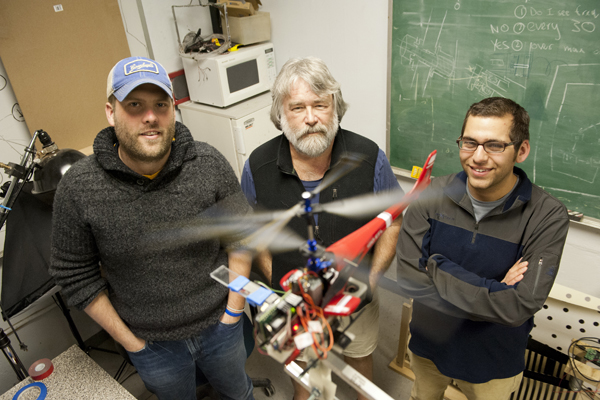

Blustein and Westphal are members of professor Joseph Ayers’ biomimetic research lab, which investigates the neural networks of various animal species and replicates them in robotic systems. The lab began by investigating the neural networks underlying behavior in lobsters and lamprey and to date has produced several generations of RoboLobsters and RoboLamprey that sense and respond to their environment using an electronic neural network of neurons and synapses that mimics the animal model’s brain. These systems are being developed for underwater mine countermeasures.

“There are many similarities between bees and lobsters,” said Blustein. While the other groups on the RoboBee team are working to master the body design and its sensory equipment, the bees wouldn’t get very far without an instinctive method for responding to the environment. Optical flow data collected by visual sensors on the bee’s head needs to be translated into adaptive movement in one direction or another.

“Most artificial intelligence-based robots are controlled algorithmically,” said Ayers, the principal investigator on the National Science Foundation-supported research. This means the designer must predict and generate computer programs for every possible contingency of the environment in which the robot operates, he explained. Animal behavior, in contrast, is controlled by neuronal and synaptic networks that the team mimics in what they call “biological intelligence.”

“We are adapting controllers derived from animal nervous systems to the control of robotics,” said Ayers. What sets the honeybee project apart is that it is the first time anyone has attempted neuronal-based bio-mimicry with a flying platform. Moving in three dimensions introduces a host of new complications, which the team is now addressing.

Colleagues at Harvard have introduced a group behavior component that will allow the RoboBees not only to organize pollination missions, but also to pass along what they’ve learned in some robotic version of the waggle dance.