Trusty robot helps us understand human social cues

You’re not sure why, but you don’t trust that guy. You wouldn’t give him a buck because you’re pretty sure he wouldn’t return the favor. What is it about him? Can you put your finger on it?

Despite decades of searching, scientists have not been able to identify the visual cues that help us judge a stranger's trustworthiness. But humans are a pretty cooperative bunch, so they must be gleaning something from their nonverbal interactions that tells them whom to trust and whom to be wary of.

In an article soon to be published in the journal Psychological Science, Northeastern University psychology professor David DeSteno and his colleagues shed light on the mystery. The research suggests that a distinct set of four silent cues (touching the hands, touching the face, crossing the arms and leaning away) can betray a person's untrustworthy intentions.

“There’s no one golden cue,” DeSteno said. “Context and coordination of movements are what matters.”

The research was also reported in The New York Times.

DeSteno's team, which also included researchers from Cornell University and the Massachusetts Institute of Technology's Media Lab, performed two experiments to unearth these findings. In the first, which they called the exploratory phase, the researchers asked 86 Northeastern students either to have a face-to-face conversation with another person or to engage in a web-based chat session. The live conversations were video recorded and later coded for the amount of fidgeting each participant displayed.

After the initial conversation, the same two people were asked to play a prisoner's dilemma game, with real money (albeit not much) at stake. Players could be selfish and try to make a lot of money for themselves, or they could be generous, hoping their partner would do the same, for a smaller but shared profit. As might be expected, participants were less generous when they didn't trust the other player.

Players who had face-to-face conversations were much better at picking out the less honest partners than were those who had only chatted online. And if someone displayed the set of four cues mentioned above, that person was less likely to be generous, and the partner would sense it, even though she couldn't say why.

“But the problem,” DeSteno said, “was that identifying the exact cues that matter is difficult. Humans express many things at once.”

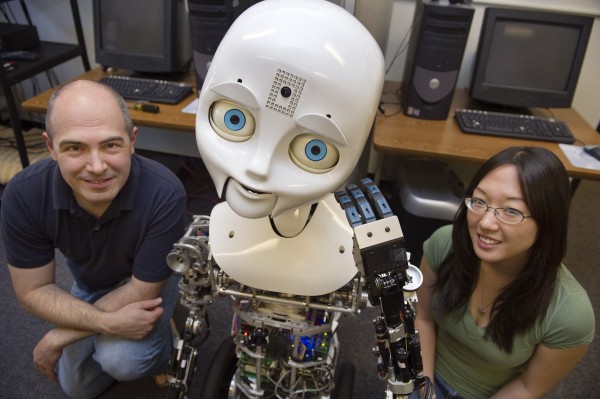

To validate the cue set, the team repeated the experiment. But this time, instead of talking with another Northeastern student, the participants conversed with Nexi, a robot created by MIT's Cynthia Breazeal.

Two experimenters behind the proverbial curtain controlled Nexi's voice and movements. The participants were unaware of the experimenters, and when they later played the money game with Nexi, they assumed they were playing against the robot itself. When Nexi touched its face and hands during the initial conversation, or leaned back or crossed its arms, people did not trust it to cooperate in the game and kept their money to themselves.

Because the researchers controlled exactly which nonverbal cues participants received, the Nexi experiment confirms that the cue set revealed in the first experiment is not just a relic of over-fidgety participants, DeSteno said. More than that, he said, these additional results suggest that robots are capable of building trust and social bonds with humans, and that our minds are willing to assign moral intent to technological entities.